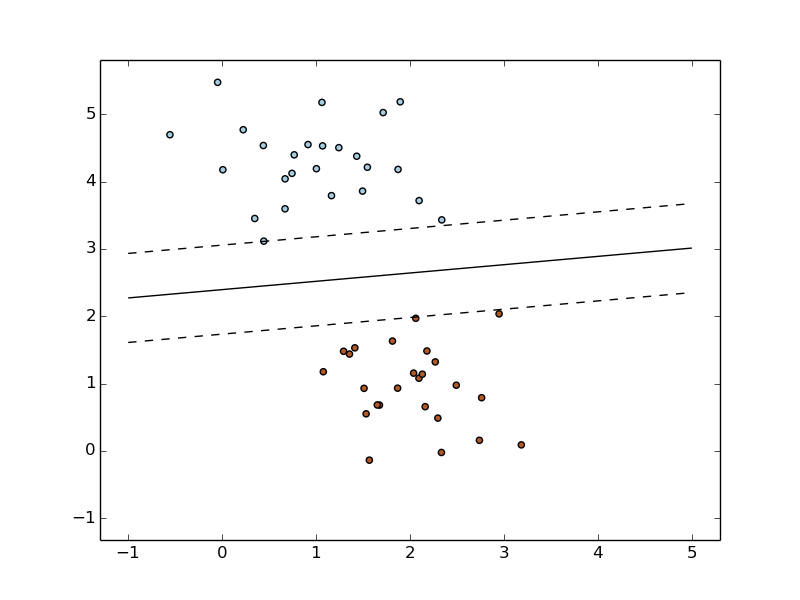

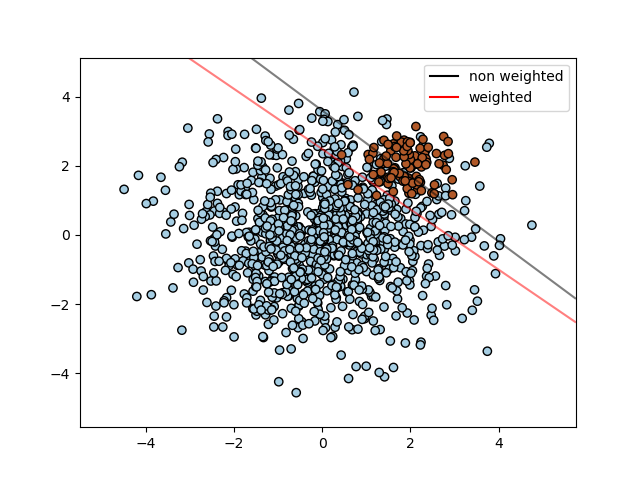

Plot the maximum-margin separating hyperplane within a two-class separable dataset using a Support Vector Machine classifier with a linear kernel. The support vectors are the highlighted points lying on the margin. Note that the lines you see on the plot above are not the hyperplane $\mathbf{w}^\top\mathbf{x}$ itself.

SVM: Separating hyperplane for unbalanced classes. Find the optimal separating hyperplane using an SVC for classes that are unbalanced. We first find the separating plane with a plain SVC and then plot (dashed) the separating hyperplane with automatic correction for unbalanced classes.

Wolfram Research (2015), "Hyperplane," Wolfram Language function. Wolfram Language & System Documentation Center.

Let's discuss how the weights $\mathbf{w}$ relate to the slope of the decision boundary. To draw the boundary, set the hyperplane parameter values to those found during the fitting process. If we plot this, we should clearly be able to separate the setosa species from the other species.
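For two features $x_1, x_2$ the link between $\mathbf{w}$ and the slope can be worked out directly. This short derivation assumes labels in $\{-1, +1\}$, the usual canonical scaling in which the margin boundaries are $\mathbf{w}^\top\mathbf{x} + b = \pm 1$, and $w_2 \neq 0$:

$$
\begin{aligned}
w_1 x_1 + w_2 x_2 + b = 0 \quad &\Longrightarrow \quad x_2 = -\frac{w_1}{w_2}\,x_1 - \frac{b}{w_2},\\
w_1 x_1 + w_2 x_2 + b = \pm 1 \quad &\Longrightarrow \quad x_2 = -\frac{w_1}{w_2}\,x_1 - \frac{b \mp 1}{w_2},\\
\text{margin width} &= \frac{2}{\lVert \mathbf{w} \rVert}.
\end{aligned}
$$

So the boundary has slope $-w_1/w_2$ and intercept $-b/w_2$, the two margin lines are parallel to it, and $\mathbf{w}$ itself points perpendicular to the boundary. A larger $\lVert\mathbf{w}\rVert$ means a narrower margin.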

#PLOT HYPERPLANE CODE#
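A minimal, dependency-free Python sketch of the fit-then-plot workflow, assuming labels in $\{-1, +1\}$ and a linear kernel. It does not call a library SVC. Instead it trains a linear SVM by full-batch subgradient descent on the hinge-loss objective, which is a simple swapped-in technique and not the solver an SVC uses. Each class's loss can optionally be scaled by a weight, one way to correct for unbalanced classes. `balanced_class_weights` uses the `n_samples / (n_classes * count)` heuristic that scikit-learn applies for `class_weight="balanced"`. To get something drawable, the sketch solves $\mathbf{w}^\top\mathbf{x} + b = 0, \pm 1$ for the last coordinate. That yields line endpoints in 2D, or a plane height $z$ in 3D when two coordinates are free. All function names and hyperparameters (`lam`, `lr`, `epochs`) are hypothetical.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def balanced_class_weights(y: Sequence[int]) -> Dict[int, float]:
    """Heuristic per-class weights: n_samples / (n_classes * count)."""
    counts: Dict[int, int] = {}
    for label in y:
        counts[label] = counts.get(label, 0) + 1
    n, k = len(y), len(counts)
    return {label: n / (k * c) for label, c in counts.items()}


def fit_linear_svm(
    X: Sequence[Vector],
    y: Sequence[int],
    lam: float = 0.01,
    lr: float = 0.01,
    epochs: int = 2000,
    class_weight: Optional[Dict[int, float]] = None,
) -> Tuple[List[float], float]:
    """Full-batch subgradient descent on a (class-weighted) hinge-loss objective.

    Minimises lam/2 * ||w||^2 + (1/n) * sum_i c[y_i] * max(0, 1 - y_i * (w.x_i + b))
    for labels y_i in {-1, +1}. The bias b is not regularised.
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    weights = class_weight or {}
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the L2 penalty
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1.0:  # inside the margin or misclassified: hinge is active
                c = weights.get(yi, 1.0)
                for j in range(d):
                    grad_w[j] -= c * yi * xi[j] / n
                grad_b -= c * yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w: Vector, b: float, x: Vector) -> int:
    """sign(w.x + b), mapping 0 to +1."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


def solve_last_coordinate(w: Vector, b: float, free: Vector, offset: float = 0.0) -> float:
    """Solve w.x + b = offset for the last coordinate of x, given the others.

    offset=0 is the decision boundary, offset=+1/-1 the margin boundaries.
    One free coordinate gives a line (2D); two give a plane height z (3D).
    Assumes w[-1] != 0.
    """
    partial = sum(wj * xj for wj, xj in zip(w[:-1], free))
    return (offset - b - partial) / w[-1]


def boundary_segments(
    w: Vector, b: float, x_min: float, x_max: float
) -> Dict[str, List[Tuple[float, float]]]:
    """Endpoints of the boundary and both margin lines over [x_min, x_max] (2D only)."""
    return {
        name: [(x1, solve_last_coordinate(w, b, [x1], off)) for x1 in (x_min, x_max)]
        for name, off in (("boundary", 0.0), ("margin+1", 1.0), ("margin-1", -1.0))
    }


if __name__ == "__main__":
    X = [(2.0, 2.0), (3.0, 3.0), (3.0, 2.0), (-2.0, -2.0), (-3.0, -3.0), (-3.0, -2.0)]
    y = [1, 1, 1, -1, -1, -1]
    w, b = fit_linear_svm(X, y, class_weight=balanced_class_weights(y))
    print("w =", w, "b =", b)
    print("predictions:", [predict(w, b, x) for x in X])
    segs = boundary_segments(w, b, -4.0, 4.0)
    # With matplotlib (not imported here) a segment could be drawn as
    # plt.plot(*zip(*segs["boundary"])), and the margins with linestyle="--".
    print(segs)
```

For the 3D case, calling `solve_last_coordinate(w, b, [x, y])` over a grid of `(x, y)` values gives the heights of the separating plane, which can then be passed to a surface plot.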

I already made a working circular graph with data points and have even managed to add a z-axis and make it 3D, to better classify the data points linearly with a 3D hyperplane. All of my code works except for the 3D hyperplane, which is supposed to be there but isn't.

Below is the updated function for anyone else looking for the same basic framework:

```matlab
function svm3dplot(mdl, X, group)
% Gather support vectors from ClassificationSVM struct
sv = mdl.SupportVectors;
% Set step size for finer sampling
d = 0.05;
% Generate grid for predictions at finer sample rate
% ...
end
```

Figure 2: The five plots above show different hyperplane boundaries and the optimal hyperplane separating the example data for C = 0.01, 0.1, 1, 10 and 100.

The plot below shows the optimal separating hyperplane and its margin for a data set in two dimensions. These functions produce helpful 2D and 3D diagnostic plots after fitting with hyper.fit.

The Support Vector Machine (SVM) is a linear classifier that can be viewed as an extension of the Perceptron developed by Rosenblatt in 1958. The Perceptron is guaranteed to find a separating hyperplane if one exists; the SVM finds the maximum-margin separating hyperplane. Quoting from "Support-Vector Networks" by Cortes and Vapnik (1995): "…"

Setting: We define a linear classifier $h(\mathbf{x}) = \operatorname{sign}(\mathbf{w}^\top\mathbf{x} + b)$, just like logistic regression. You can find the coefficients ($\mathbf{w}$ and $b$) using the two equations below. The only difference is that we use the hinge loss instead of the logistic loss.
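To make the hinge-versus-logistic comparison concrete, here is a small Python sketch of both losses written as functions of the signed margin $z = y(\mathbf{w}^\top\mathbf{x} + b)$. The function names are hypothetical. The hinge loss is exactly zero once $z \ge 1$, so points safely outside the margin contribute nothing and only points on or inside it can influence the solution. The logistic loss stays positive for every $z$.

```python
import math


def hinge_loss(z: float) -> float:
    """SVM hinge loss of the signed margin z = y * (w.x + b)."""
    return max(0.0, 1.0 - z)


def logistic_loss(z: float) -> float:
    """Logistic-regression loss log(1 + exp(-z)) of the same signed margin."""
    # For z < 0 rewrite as -z + log(1 + e^z) so exp() never overflows.
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


if __name__ == "__main__":
    for z in (-2.0, 0.0, 1.0, 3.0):
        print(f"z={z:+.1f}  hinge={hinge_loss(z):.3f}  logistic={logistic_loss(z):.3f}")
```

At $z = 1$ the hinge loss is already 0, while the logistic loss is still about 0.313 and keeps pushing correctly classified points further away.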
